Tutorial¶

Let us consider chapter 7 of the excellent treatise on the subject of Exponential Smoothing By Hyndman and Athanasopoulos [1]. We will work through all the examples in the chapter as they unfold.

[1] Hyndman, Rob J., and George Athanasopoulos. Forecasting: principles and practice. OTexts, 2014.

Exponential smoothing¶

First we load some data. We have included the R data in the notebook for expedience.

[1]:

import os

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from statsmodels.tsa.api import ExponentialSmoothing, SimpleExpSmoothing, Holt

data = [446.6565, 454.4733, 455.663 , 423.6322, 456.2713, 440.5881, 425.3325, 485.1494, 506.0482, 526.792 , 514.2689, 494.211 ]

index= pd.date_range(start='1996', end='2008', freq='A')

oildata = pd.Series(data, index)

data = [17.5534, 21.86 , 23.8866, 26.9293, 26.8885, 28.8314, 30.0751, 30.9535, 30.1857, 31.5797, 32.5776, 33.4774, 39.0216, 41.3864, 41.5966]

index= pd.date_range(start='1990', end='2005', freq='A')

air = pd.Series(data, index)

data = [263.9177, 268.3072, 260.6626, 266.6394, 277.5158, 283.834 , 290.309 , 292.4742, 300.8307, 309.2867, 318.3311, 329.3724, 338.884 , 339.2441, 328.6006, 314.2554, 314.4597, 321.4138, 329.7893, 346.3852, 352.2979, 348.3705, 417.5629, 417.1236, 417.7495, 412.2339, 411.9468, 394.6971, 401.4993, 408.2705, 414.2428]

index= pd.date_range(start='1970', end='2001', freq='A')

livestock2 = pd.Series(data, index)

data = [407.9979 , 403.4608, 413.8249, 428.105 , 445.3387, 452.9942, 455.7402]

index= pd.date_range(start='2001', end='2008', freq='A')

livestock3 = pd.Series(data, index)

data = [41.7275, 24.0418, 32.3281, 37.3287, 46.2132, 29.3463, 36.4829, 42.9777, 48.9015, 31.1802, 37.7179, 40.4202, 51.2069, 31.8872, 40.9783, 43.7725, 55.5586, 33.8509, 42.0764, 45.6423, 59.7668, 35.1919, 44.3197, 47.9137]

index= pd.date_range(start='2005', end='2010-Q4', freq='QS-OCT')

aust = pd.Series(data, index)

Simple Exponential Smoothing¶

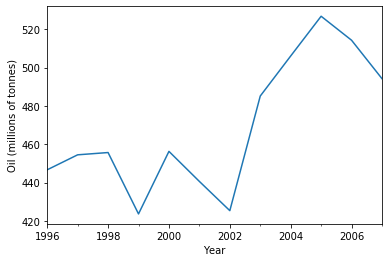

Lets use Simple Exponential Smoothing to forecast the below oil data.

[2]:

ax=oildata.plot()

ax.set_xlabel("Year")

ax.set_ylabel("Oil (millions of tonnes)")

plt.show()

print("Figure 7.1: Oil production in Saudi Arabia from 1996 to 2007.")

Figure 7.1: Oil production in Saudi Arabia from 1996 to 2007.

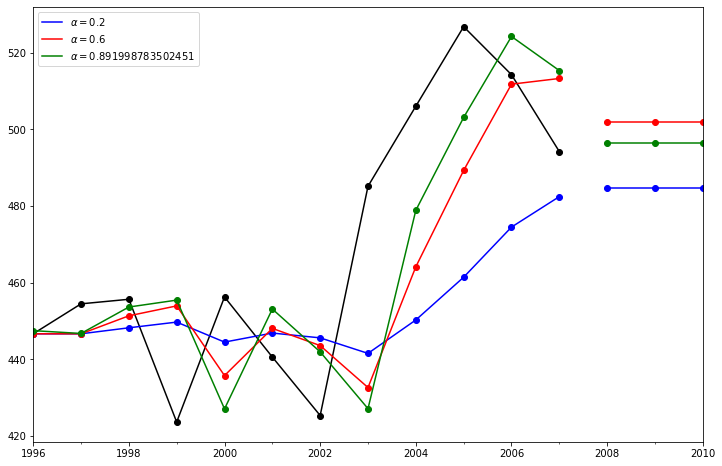

Here we run three variants of simple exponential smoothing: 1. In fit1 we do not use the auto optimization but instead choose to explicitly provide the model with the \(\alpha=0.2\) parameter 2. In fit2 as above we choose an \(\alpha=0.6\) 3. In fit3 we allow statsmodels to automatically find an optimized \(\alpha\) value for us. This is the recommended approach.

[3]:

fit1 = SimpleExpSmoothing(oildata).fit(smoothing_level=0.2,optimized=False)

fcast1 = fit1.forecast(3).rename(r'$\alpha=0.2$')

fit2 = SimpleExpSmoothing(oildata).fit(smoothing_level=0.6,optimized=False)

fcast2 = fit2.forecast(3).rename(r'$\alpha=0.6$')

fit3 = SimpleExpSmoothing(oildata).fit()

fcast3 = fit3.forecast(3).rename(r'$\alpha=%s$'%fit3.model.params['smoothing_level'])

ax = oildata.plot(marker='o', color='black', figsize=(12,8))

fcast1.plot(marker='o', ax=ax, color='blue', legend=True)

fit1.fittedvalues.plot(marker='o', ax=ax, color='blue')

fcast2.plot(marker='o', ax=ax, color='red', legend=True)

fit2.fittedvalues.plot(marker='o', ax=ax, color='red')

fcast3.plot(marker='o', ax=ax, color='green', legend=True)

fit3.fittedvalues.plot(marker='o', ax=ax, color='green')

plt.show()

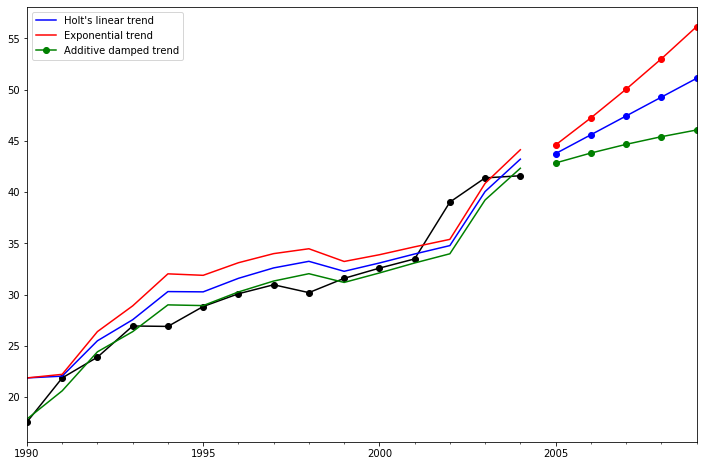

Holt’s Method¶

Lets take a look at another example. This time we use air pollution data and the Holt’s Method. We will fit three examples again. 1. In fit1 we again choose not to use the optimizer and provide explicit values for \(\alpha=0.8\) and \(\beta=0.2\) 2. In fit2 we do the same as in fit1 but choose to use an exponential model rather than a Holt’s additive model. 3. In fit3 we used a damped versions of the Holt’s additive model but allow the dampening parameter \(\phi\) to

be optimized while fixing the values for \(\alpha=0.8\) and \(\beta=0.2\)

[4]:

fit1 = Holt(air).fit(smoothing_level=0.8, smoothing_slope=0.2, optimized=False)

fcast1 = fit1.forecast(5).rename("Holt's linear trend")

fit2 = Holt(air, exponential=True).fit(smoothing_level=0.8, smoothing_slope=0.2, optimized=False)

fcast2 = fit2.forecast(5).rename("Exponential trend")

fit3 = Holt(air, damped=True).fit(smoothing_level=0.8, smoothing_slope=0.2)

fcast3 = fit3.forecast(5).rename("Additive damped trend")

ax = air.plot(color="black", marker="o", figsize=(12,8))

fit1.fittedvalues.plot(ax=ax, color='blue')

fcast1.plot(ax=ax, color='blue', marker="o", legend=True)

fit2.fittedvalues.plot(ax=ax, color='red')

fcast2.plot(ax=ax, color='red', marker="o", legend=True)

fit3.fittedvalues.plot(ax=ax, color='green')

fcast3.plot(ax=ax, color='green', marker="o", legend=True)

plt.show()

Seasonally adjusted data¶

Lets look at some seasonally adjusted livestock data. We fit five Holt’s models. The below table allows us to compare results when we use exponential versus additive and damped versus non-damped.

Note: fit4 does not allow the parameter \(\phi\) to be optimized by providing a fixed value of \(\phi=0.98\)

[5]:

fit1 = SimpleExpSmoothing(livestock2).fit()

fit2 = Holt(livestock2).fit()

fit3 = Holt(livestock2,exponential=True).fit()

fit4 = Holt(livestock2,damped=True).fit(damping_slope=0.98)

fit5 = Holt(livestock2,exponential=True,damped=True).fit()

params = ['smoothing_level', 'smoothing_slope', 'damping_slope', 'initial_level', 'initial_slope']

results=pd.DataFrame(index=[r"$\alpha$",r"$\beta$",r"$\phi$",r"$l_0$","$b_0$","SSE"] ,columns=['SES', "Holt's","Exponential", "Additive", "Multiplicative"])

results["SES"] = [fit1.params[p] for p in params] + [fit1.sse]

results["Holt's"] = [fit2.params[p] for p in params] + [fit2.sse]

results["Exponential"] = [fit3.params[p] for p in params] + [fit3.sse]

results["Additive"] = [fit4.params[p] for p in params] + [fit4.sse]

results["Multiplicative"] = [fit5.params[p] for p in params] + [fit5.sse]

results

[5]:

| SES | Holt's | Exponential | Additive | Multiplicative | |

|---|---|---|---|---|---|

| $\alpha$ | 1.000000 | 0.974306 | 0.977633 | 0.978847 | 0.974911 |

| $\beta$ | NaN | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| $\phi$ | NaN | NaN | NaN | 0.980000 | 0.981646 |

| $l_0$ | 263.917700 | 258.882647 | 260.341533 | 257.357648 | 258.951944 |

| $b_0$ | NaN | 5.010775 | 1.013780 | 6.644538 | 1.038144 |

| SSE | 6761.350218 | 6004.138200 | 6104.194746 | 6036.555004 | 6081.995045 |

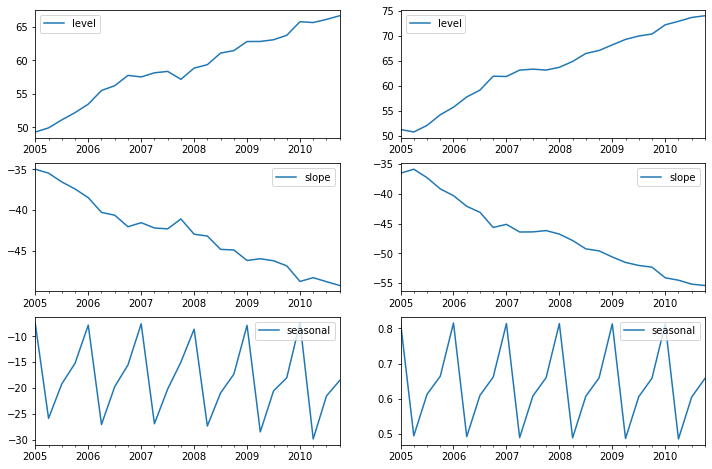

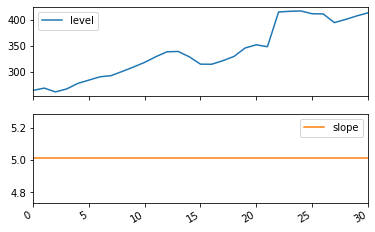

Plots of Seasonally Adjusted Data¶

The following plots allow us to evaluate the level and slope/trend components of the above table’s fits.

[6]:

for fit in [fit2,fit4]:

pd.DataFrame(np.c_[fit.level,fit.slope]).rename(

columns={0:'level',1:'slope'}).plot(subplots=True)

plt.show()

print('Figure 7.4: Level and slope components for Holt’s linear trend method and the additive damped trend method.')

Figure 7.4: Level and slope components for Holt’s linear trend method and the additive damped trend method.

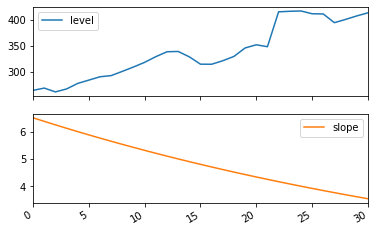

Comparison¶

Here we plot a comparison Simple Exponential Smoothing and Holt’s Methods for various additive, exponential and damped combinations. All of the models parameters will be optimized by statsmodels.

[7]:

fit1 = SimpleExpSmoothing(livestock2).fit()

fcast1 = fit1.forecast(9).rename("SES")

fit2 = Holt(livestock2).fit()

fcast2 = fit2.forecast(9).rename("Holt's")

fit3 = Holt(livestock2, exponential=True).fit()

fcast3 = fit3.forecast(9).rename("Exponential")

fit4 = Holt(livestock2, damped=True).fit(damping_slope=0.98)

fcast4 = fit4.forecast(9).rename("Additive Damped")

fit5 = Holt(livestock2, exponential=True, damped=True).fit()

fcast5 = fit5.forecast(9).rename("Multiplicative Damped")

ax = livestock2.plot(color="black", marker="o", figsize=(12,8))

livestock3.plot(ax=ax, color="black", marker="o", legend=False)

fcast1.plot(ax=ax, color='red', legend=True)

fcast2.plot(ax=ax, color='green', legend=True)

fcast3.plot(ax=ax, color='blue', legend=True)

fcast4.plot(ax=ax, color='cyan', legend=True)

fcast5.plot(ax=ax, color='magenta', legend=True)

ax.set_ylabel('Livestock, sheep in Asia (millions)')

plt.show()

print('Figure 7.5: Forecasting livestock, sheep in Asia: comparing forecasting performance of non-seasonal methods.')

Figure 7.5: Forecasting livestock, sheep in Asia: comparing forecasting performance of non-seasonal methods.

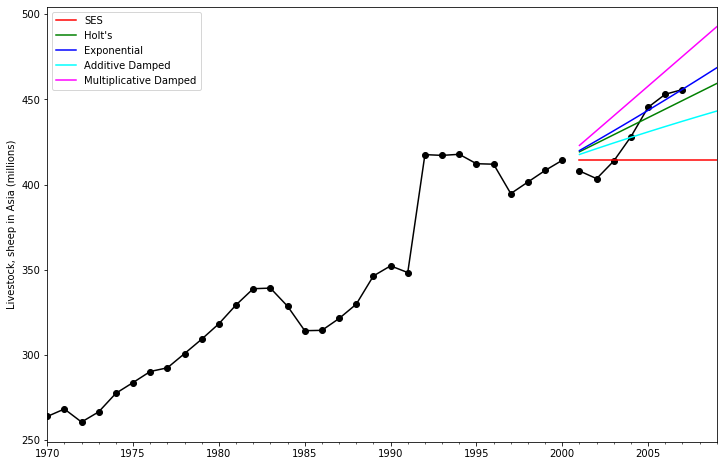

Holt’s Winters Seasonal¶

Finally we are able to run full Holt’s Winters Seasonal Exponential Smoothing including a trend component and a seasonal component. statsmodels allows for all the combinations including as shown in the examples below: 1. fit1 additive trend, additive seasonal of period season_length=4 and the use of a Box-Cox transformation. 1. fit2 additive trend, multiplicative seasonal of period season_length=4 and the use of a Box-Cox transformation.. 1. fit3 additive damped trend,

additive seasonal of period season_length=4 and the use of a Box-Cox transformation. 1. fit4 additive damped trend, multiplicative seasonal of period season_length=4 and the use of a Box-Cox transformation.

The plot shows the results and forecast for fit1 and fit2. The table allows us to compare the results and parameterizations.

[8]:

fit1 = ExponentialSmoothing(aust, seasonal_periods=4, trend='add', seasonal='add').fit(use_boxcox=True)

fit2 = ExponentialSmoothing(aust, seasonal_periods=4, trend='add', seasonal='mul').fit(use_boxcox=True)

fit3 = ExponentialSmoothing(aust, seasonal_periods=4, trend='add', seasonal='add', damped=True).fit(use_boxcox=True)

fit4 = ExponentialSmoothing(aust, seasonal_periods=4, trend='add', seasonal='mul', damped=True).fit(use_boxcox=True)

results=pd.DataFrame(index=[r"$\alpha$",r"$\beta$",r"$\phi$",r"$\gamma$",r"$l_0$","$b_0$","SSE"])

params = ['smoothing_level', 'smoothing_slope', 'damping_slope', 'smoothing_seasonal', 'initial_level', 'initial_slope']

results["Additive"] = [fit1.params[p] for p in params] + [fit1.sse]

results["Multiplicative"] = [fit2.params[p] for p in params] + [fit2.sse]

results["Additive Dam"] = [fit3.params[p] for p in params] + [fit3.sse]

results["Multiplica Dam"] = [fit4.params[p] for p in params] + [fit4.sse]

ax = aust.plot(figsize=(10,6), marker='o', color='black', title="Forecasts from Holt-Winters' multiplicative method" )

ax.set_ylabel("International visitor night in Australia (millions)")

ax.set_xlabel("Year")

fit1.fittedvalues.plot(ax=ax, style='--', color='red')

fit2.fittedvalues.plot(ax=ax, style='--', color='green')

fit1.forecast(8).rename('Holt-Winters (add-add-seasonal)').plot(ax=ax, style='--', marker='o', color='red', legend=True)

fit2.forecast(8).rename('Holt-Winters (add-mul-seasonal)').plot(ax=ax, style='--', marker='o', color='green', legend=True)

plt.show()

print("Figure 7.6: Forecasting international visitor nights in Australia using Holt-Winters method with both additive and multiplicative seasonality.")

results

/home/travis/build/statsmodels/statsmodels/statsmodels/tsa/holtwinters.py:725: ConvergenceWarning: Optimization failed to converge. Check mle_retvals.

ConvergenceWarning)

/home/travis/build/statsmodels/statsmodels/statsmodels/tsa/holtwinters.py:725: ConvergenceWarning: Optimization failed to converge. Check mle_retvals.

ConvergenceWarning)

/home/travis/build/statsmodels/statsmodels/statsmodels/tsa/holtwinters.py:725: ConvergenceWarning: Optimization failed to converge. Check mle_retvals.

ConvergenceWarning)

Figure 7.6: Forecasting international visitor nights in Australia using Holt-Winters method with both additive and multiplicative seasonality.

[8]:

| Additive | Multiplicative | Additive Dam | Multiplica Dam | |

|---|---|---|---|---|

| $\alpha$ | 4.546141e-01 | 3.662202e-01 | 9.082834e-09 | 0.000182 |

| $\beta$ | 5.824107e-09 | 6.273474e-20 | 1.550290e-11 | 0.000182 |

| $\phi$ | NaN | NaN | 9.431182e-01 | 0.913648 |

| $\gamma$ | 5.244166e-01 | 9.043075e-14 | 1.290373e-06 | 0.000000 |

| $l_0$ | 1.421752e+01 | 1.454882e+01 | 1.415855e+01 | 14.534854 |

| $b_0$ | 1.307774e-01 | 1.661481e-01 | 2.454178e-01 | 0.484784 |

| SSE | 5.001652e+01 | 4.307295e+01 | 3.527230e+01 | 39.711041 |

The Internals¶

It is possible to get at the internals of the Exponential Smoothing models.

Here we show some tables that allow you to view side by side the original values \(y_t\), the level \(l_t\), the trend \(b_t\), the season \(s_t\) and the fitted values \(\hat{y}_t\).

[9]:

df = pd.DataFrame(np.c_[aust, fit1.level, fit1.slope, fit1.season, fit1.fittedvalues],

columns=[r'$y_t$',r'$l_t$',r'$b_t$',r'$s_t$',r'$\hat{y}_t$'],index=aust.index)

df.append(fit1.forecast(8).rename(r'$\hat{y}_t$').to_frame(), sort=True)

[9]:

| $\hat{y}_t$ | $b_t$ | $l_t$ | $s_t$ | $y_t$ | |

|---|---|---|---|---|---|

| 2005-01-01 | 41.721221 | -34.969400 | 49.317697 | -7.593408 | 41.7275 |

| 2005-04-01 | 24.190248 | -35.452843 | 49.932487 | -25.834708 | 24.0418 |

| 2005-07-01 | 31.460568 | -36.532793 | 51.126161 | -19.182959 | 32.3281 |

| 2005-10-01 | 36.634784 | -37.397622 | 52.210084 | -15.209685 | 37.3287 |

| 2006-01-01 | 45.097632 | -38.467246 | 53.476749 | -7.837277 | 46.2132 |

| 2006-04-01 | 27.191721 | -40.276276 | 55.513814 | -27.017372 | 29.3463 |

| 2006-07-01 | 36.544194 | -40.625031 | 56.224457 | -19.713848 | 36.4829 |

| 2006-10-01 | 41.449477 | -42.041726 | 57.766045 | -15.523295 | 42.9777 |

| 2007-01-01 | 50.934454 | -41.543812 | 57.536734 | -7.588671 | 48.9015 |

| 2007-04-01 | 31.418231 | -42.197848 | 58.151014 | -26.874289 | 31.1802 |

| 2007-07-01 | 38.718348 | -42.303496 | 58.363010 | -20.191916 | 37.7179 |

| 2007-10-01 | 44.140665 | -41.085821 | 57.181923 | -14.982785 | 40.4202 |

| 2008-01-01 | 49.315841 | -42.961487 | 58.853001 | -8.619857 | 51.2069 |

| 2008-04-01 | 32.306999 | -43.186553 | 59.367006 | -27.309185 | 31.8872 |

| 2008-07-01 | 39.207465 | -44.826989 | 61.095601 | -20.925588 | 40.9783 |

| 2008-10-01 | 44.551262 | -44.899480 | 61.462177 | -17.319911 | 43.7725 |

| 2009-01-01 | 54.358086 | -46.192233 | 62.816830 | -7.881665 | 55.5586 |

| 2009-04-01 | 35.153854 | -45.980195 | 62.832171 | -28.447885 | 33.8509 |

| 2009-07-01 | 43.066497 | -46.228140 | 63.082678 | -20.550665 | 42.0764 |

| 2009-10-01 | 45.871207 | -46.852517 | 63.748866 | -17.997808 | 45.6423 |

| 2010-01-01 | 57.166623 | -48.770386 | 65.777575 | -7.372147 | 59.7668 |

| 2010-04-01 | 36.761380 | -48.308341 | 65.650021 | -29.816828 | 35.1919 |

| 2010-07-01 | 44.932496 | -48.798665 | 66.119447 | -21.517489 | 44.3197 |

| 2010-10-01 | 48.399587 | -49.269822 | 66.667428 | -18.522282 | 47.9137 |

| 2011-01-01 | 61.337982 | NaN | NaN | NaN | NaN |

| 2011-04-01 | 37.242908 | NaN | NaN | NaN | NaN |

| 2011-07-01 | 46.842670 | NaN | NaN | NaN | NaN |

| 2011-10-01 | 51.005290 | NaN | NaN | NaN | NaN |

| 2012-01-01 | 64.470874 | NaN | NaN | NaN | NaN |

| 2012-04-01 | 39.776989 | NaN | NaN | NaN | NaN |

| 2012-07-01 | 49.635936 | NaN | NaN | NaN | NaN |

| 2012-10-01 | 53.901538 | NaN | NaN | NaN | NaN |

[10]:

df = pd.DataFrame(np.c_[aust, fit2.level, fit2.slope, fit2.season, fit2.fittedvalues],

columns=[r'$y_t$',r'$l_t$',r'$b_t$',r'$s_t$',r'$\hat{y}_t$'],index=aust.index)

df.append(fit2.forecast(8).rename(r'$\hat{y}_t$').to_frame(), sort=True)

[10]:

| $\hat{y}_t$ | $b_t$ | $l_t$ | $s_t$ | $y_t$ | |

|---|---|---|---|---|---|

| 2005-01-01 | 41.858321 | -36.533965 | 51.248931 | 0.815816 | 41.7275 |

| 2005-04-01 | 25.838073 | -35.869635 | 50.739876 | 0.495331 | 24.0418 |

| 2005-07-01 | 31.657988 | -37.288171 | 52.065347 | 0.612930 | 32.3281 |

| 2005-10-01 | 35.189322 | -39.174586 | 54.193251 | 0.664127 | 37.3287 |

| 2006-01-01 | 44.929298 | -40.311833 | 55.712698 | 0.814974 | 46.2132 |

| 2006-04-01 | 27.934180 | -42.093979 | 57.763798 | 0.493011 | 29.3463 |

| 2006-07-01 | 35.825130 | -43.106909 | 59.134813 | 0.610035 | 36.4829 |

| 2006-10-01 | 39.769843 | -45.651690 | 61.915889 | 0.661649 | 42.9777 |

| 2007-01-01 | 51.176261 | -45.127249 | 61.863612 | 0.813514 | 48.9015 |

| 2007-04-01 | 30.814974 | -46.414917 | 63.142490 | 0.490255 | 31.1802 |

| 2007-07-01 | 39.009470 | -46.392257 | 63.333948 | 0.608168 | 37.7179 |

| 2007-10-01 | 42.486262 | -46.175681 | 63.149266 | 0.660387 | 40.4202 |

| 2008-01-01 | 52.173235 | -46.764685 | 63.707506 | 0.813308 | 51.2069 |

| 2008-04-01 | 31.677038 | -47.841457 | 64.877150 | 0.489512 | 31.8872 |

| 2008-07-01 | 40.035343 | -49.246026 | 66.475174 | 0.607618 | 40.9783 |

| 2008-10-01 | 44.516461 | -49.581199 | 67.072236 | 0.659526 | 43.7725 |

| 2009-01-01 | 55.343093 | -50.608812 | 68.196997 | 0.812689 | 55.5586 |

| 2009-04-01 | 33.773328 | -51.521924 | 69.292109 | 0.487836 | 33.8509 |

| 2009-07-01 | 42.644144 | -52.031931 | 69.978078 | 0.606317 | 42.0764 |

| 2009-10-01 | 46.778696 | -52.316747 | 70.372503 | 0.658637 | 45.6423 |

| 2010-01-01 | 58.008481 | -54.101175 | 72.219744 | 0.812212 | 59.7668 |

| 2010-04-01 | 35.648733 | -54.506659 | 72.917404 | 0.486477 | 35.1919 |

| 2010-07-01 | 44.784147 | -55.170721 | 73.690973 | 0.605343 | 44.3197 |

| 2010-10-01 | 49.174718 | -55.395841 | 74.036986 | 0.657769 | 47.9137 |

| 2011-01-01 | 60.966729 | NaN | NaN | NaN | NaN |

| 2011-04-01 | 36.993678 | NaN | NaN | NaN | NaN |

| 2011-07-01 | 46.711883 | NaN | NaN | NaN | NaN |

| 2011-10-01 | 51.482746 | NaN | NaN | NaN | NaN |

| 2012-01-01 | 64.455796 | NaN | NaN | NaN | NaN |

| 2012-04-01 | 39.017212 | NaN | NaN | NaN | NaN |

| 2012-07-01 | 49.291377 | NaN | NaN | NaN | NaN |

| 2012-10-01 | 54.320260 | NaN | NaN | NaN | NaN |

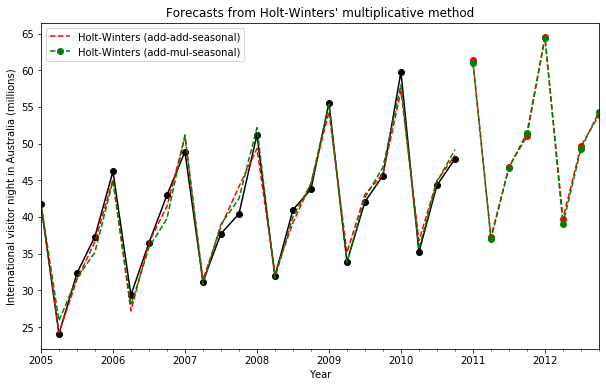

Finally lets look at the levels, slopes/trends and seasonal components of the models.

[11]:

states1 = pd.DataFrame(np.c_[fit1.level, fit1.slope, fit1.season], columns=['level','slope','seasonal'], index=aust.index)

states2 = pd.DataFrame(np.c_[fit2.level, fit2.slope, fit2.season], columns=['level','slope','seasonal'], index=aust.index)

fig, [[ax1, ax4],[ax2, ax5], [ax3, ax6]] = plt.subplots(3, 2, figsize=(12,8))

states1[['level']].plot(ax=ax1)

states1[['slope']].plot(ax=ax2)

states1[['seasonal']].plot(ax=ax3)

states2[['level']].plot(ax=ax4)

states2[['slope']].plot(ax=ax5)

states2[['seasonal']].plot(ax=ax6)

plt.show()